PCE Storage Device Layout

Create separate storage device partitions to reserve the amounts of space specified below. These recommendations are based on PCE Capacity Planning.

The values given in these recommendation tables are guidelines based on testing in Illumio’s labs. If you wish to deviate from these recommendations based on your own platform standards, please first contact your Illumio support representative for advice and approval.

PCE Single-Node Cluster for 250 VENs

| Storage Device | Partition mount point | Size to Allocate | Node Types | Notes |
|---|---|---|---|---|
| Device 1, Partition A | / | 8GB | Core, Data | The size of this partition assumes that system temporary files are stored in /tmp and that the core dump file size is set to zero. The PCE installation occupies approximately 500MB of this space. |
| Device 1, Partition B | /var/log | 16GB | Core, Data | The size of this partition assumes that PCE application logs and system logs are both stored in … |
| Device 1, Partition C | /var/lib/illumio-pce | Balance of Device 1 | Core, Data | The size of this partition assumes that Core nodes use local storage for application code in /var/lib/illumio-pce, and that PCE support report files and other temporary (ephemeral) files are stored in /var/lib/illumio-pce/tmp. |

PCE 2x2 Multi-Node Cluster for 2,500 VENs

| Storage Device | Partition mount point | Size to Allocate | Node Types | Notes |
|---|---|---|---|---|
| Device 1, Partition A | / | 16GB | Core, Data | The size of this partition assumes that system temporary files are stored in /tmp and that the core dump file size is set to zero. |
| Device 1, Partition B | /var/log | 32GB | Core, Data | The size of this partition assumes that PCE application logs and system logs are both stored in … PCE application logs are stored in the … |
| Device 1, Partition C | /var/lib/illumio-pce | Balance of Device 1 | Core, Data | The size of this partition assumes that Core nodes use local storage for application code in /var/lib/illumio-pce, and that PCE support report files and other temporary (ephemeral) files are stored in /var/lib/illumio-pce/tmp. |
| Device 2, Single Partition | /var/lib/illumio-pce/data/Explorer | All of Device 2 (250GB) | Data | For network traffic data in a two-storage-device configuration on the data nodes, use a separate device mounted on this directory. Set the … The partition mount point and the runtime setting must match. If you customize the mount point, make sure that you also change the runtime setting accordingly. |
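As a minimal sketch of keeping the two in sync for this layout, the fragment below assumes that the runtime setting in question is traffic_datastore:data_dir (described under Runtime Parameters for Traffic Datastore on Data Nodes) and that Device 2 is mounted on the /var/lib/illumio-pce/data/Explorer directory given in the table above. Only the relevant key is shown; the rest of runtime_env.yml is omitted.

```yaml
# runtime_env.yml on each data node (fragment; other settings omitted).
# Assumption: data_dir is the runtime setting that must match the Device 2 mount point.
traffic_datastore:
  # Same path as the Device 2 mount point; if you customize one, change the other too.
  data_dir: /var/lib/illumio-pce/data/Explorer
```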

PCE 2x2 Multi-Node Cluster for 10,000 VENs and PCE 4x2 Multi-Node Cluster for 25,000 VENs

| Storage Device | Partition mount point | Size to Allocate | Node Types | Notes |
|---|---|---|---|---|
| Device 1, Partition A | / | 16GB | Core, Data | The size of this partition assumes that system temporary files are stored in /tmp and that the core dump file size is set to zero. |
| Device 1, Partition B | /var/log | 32GB | Core, Data | The size of this partition assumes that PCE application logs and system logs are both stored in … PCE application logs are stored in the … |
| Device 1, Partition C | /var/lib/illumio-pce | Balance of Device 1 | Core, Data | The size of this partition assumes that Core nodes use local storage for application code in /var/lib/illumio-pce, and that PCE support report files and other temporary (ephemeral) files are stored in /var/lib/illumio-pce/tmp. |
| Device 2, Single Partition | | All of Device 2 (1TB) | Data | For network traffic data in a two-storage-device configuration on the data nodes, use a separate device mounted on this directory. In … The partition mount point and the runtime setting must match. If you customize the mount point, make sure that you also change the runtime setting accordingly. |

Runtime Parameters for Traffic Datastore on Data Nodes

For the traffic datastore, set the following parameters in the traffic_datastore section of runtime_env.yml:

- data_dir: The path to the second disk (for example, /var/lib/illumio-pce/data/traffic). This path must match the mount point of the second storage device.
- max_disk_usage_gb: Set this parameter according to the table below.
- partition_fraction: Set this parameter according to the table below.

The recommended values for these parameters, by PCE cluster type and estimated number of workloads (VENs), are as follows:

| Setting | 2x2, 2,500 VENs | 2x2, 10,000 VENs | 4x2, 25,000 VENs | Note |
|---|---|---|---|---|
| traffic_datastore:max_disk_usage_gb | 100 GB | 400 GB | 400 GB | This size reflects only part of the required total size, as detailed in PCE Capacity Planning. The remaining disk capacity is needed for database internals and data migration during upgrades. |
| traffic_datastore:partition_fraction | 0.5 | 0.5 | 0.5 | |
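Putting the parameters and the table together, a runtime_env.yml fragment for a data node in a 2x2 PCE with 10,000 VENs (or a 4x2 PCE with 25,000 VENs, which uses the same values) might look like the following. This is a sketch under two assumptions: that Device 2 is mounted at the example path /var/lib/illumio-pce/data/traffic, and that max_disk_usage_gb takes a plain number of gigabytes, as its name suggests. For a 2x2 PCE with 2,500 VENs, use 100 instead of 400.

```yaml
# runtime_env.yml on each data node (fragment; other settings omitted).
# Values for a 2x2 PCE with 10,000 VENs or a 4x2 PCE with 25,000 VENs.
traffic_datastore:
  # Assumed mount point of the second disk; must match the actual mount point.
  data_dir: /var/lib/illumio-pce/data/traffic
  # Assumed to be a plain number of gigabytes. Only part of the disk is
  # allocated; the rest is left for database internals and upgrade migrations.
  max_disk_usage_gb: 400
  partition_fraction: 0.5
```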

For additional ways to avoid disk capacity issues, see Manage Data and Disk Capacity in the PCE Administration Guide.

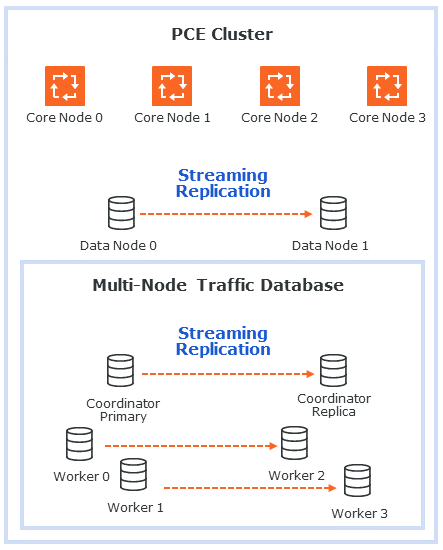

Scale Traffic Database to Multiple Nodes

When deploying the PCE, you can scale traffic data by sharding it across multiple PCE data nodes. In this way, you can store more data and improve the performance of read and write operations on traffic data. The traffic database is sharded by setting up two coordinator nodes, each of which has at least one pair of worker nodes.

Hardware Requirements for Multi-Node Traffic Database

The following table shows the minimum required resources for a multi-node traffic database.

| CPU | RAM | Storage | IOPS |
|---|---|---|---|
| 16 vCPU | 128GB | 1TB | 5,000 |

Cluster Types for Multi-Node Traffic Database

The following PCE cluster types support scaling the traffic database to multiple nodes:

- 4node_dx: 2x2 PCE with multi-node traffic database. The 2x2 numbers do not include the coordinator and worker nodes.
- 6node_dx: 4x2 PCE with multi-node traffic database. The 4x2 numbers do not include the coordinator and worker nodes.

Node Types for Multi-Node Traffic Database

The following PCE node types support scaling the traffic database to multiple nodes:

- citus_coordinator: The sharding module communicates with the PCE through the coordinator node. There must be two (2) coordinator nodes in the PCE cluster. The two nodes provide high availability: if one node goes down, the other takes over.
- citus_worker: The PCE cluster can have any even number of worker nodes, as long as there are at least two (2) pairs. As with the coordinator nodes, the worker node pairs provide high availability.

Runtime Parameters for Multi-Node Traffic Database

The following runtime parameters in runtime_env.yml support scaling the traffic database to multiple nodes:

- traffic_datastore:num_worker_nodes: Number of traffic database worker node pairs. Worker nodes must be added to the PCE cluster in sets of two to support high availability (HA). For example, if there are 4 worker nodes, num_worker_nodes is 2.
- node_type: Set to either citus_coordinator or citus_worker to configure a node as a coordinator node or a worker node.
- datacenter: In a multi-datacenter deployment, indicates which datacenter the node is in. The value can be any descriptive name, such as "west" or "east".
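To illustrate that num_worker_nodes counts pairs rather than individual nodes, here is a sketch for a hypothetical 2x2 PCE (cluster type 4node_dx) with six worker nodes, that is, three pairs. The node count is invented for illustration, and the nesting of num_worker_nodes under traffic_datastore follows the samples in Example Configurations for Multi-Node Traffic Database.

```yaml
# runtime_env.yml on one worker node (fragment; other settings omitted).
# Hypothetical cluster: 2x2 PCE (not counting coordinator and worker nodes),
# two coordinator nodes, and six worker nodes.
cluster_type: 4node_dx
node_type: citus_worker
traffic_datastore:
  # Pairs, not nodes: 6 worker nodes / 2 = 3
  num_worker_nodes: 3
```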

Set Up a Multi-Node Database

When setting up a new PCE cluster with a multi-node traffic database, use the same installation steps as usual, with the following additions:

- Install the PCE software on the core, data, coordinator, and worker nodes, using the same PCE version on all nodes.
- There must be exactly two (2) coordinator nodes and two (2) or more pairs of worker nodes.
- Set up the runtime_env.yml configuration on every node as follows. For examples, see Example Configurations for Multi-Node Traffic Database.
  - Set the cluster type (cluster_type) to 4node_dx for a 2x2 PCE or 6node_dx for a 4x2 PCE.
  - In the traffic_datastore section, set num_worker_nodes to the number of worker node pairs. For example, if the PCE cluster has 4 worker nodes, set this parameter to 2.
  - On each coordinator node, in addition to the settings already described, set node_type to citus_coordinator.
  - On each worker node, in addition to the settings already described, set node_type to citus_worker.
  - If you are using a split-datacenter deployment, set the datacenter parameter on each node to an arbitrary value that indicates which part of the datacenter the node is in.
- For installation steps, see Install the PCE and UI for a new PCE, or Upgrade the PCE for an existing PCE.

Example Configurations for Multi-Node Traffic Database

The following is a sample configuration for a coordinator node. This node is in a 4x2 PCE cluster (not counting the coordinator and worker nodes) with two pairs of worker nodes:

```yaml
cluster_type: 6node_dx
node_type: citus_coordinator
traffic_datastore:
  num_worker_nodes: 2
```

The following is a sample configuration for a worker node. This node is in a 4x2 PCE cluster (not counting the coordinator and worker nodes) with two pairs of worker nodes:

```yaml
cluster_type: 6node_dx
node_type: citus_worker
traffic_datastore:
  num_worker_nodes: 2
```

The following is a sample split-datacenter configuration.

The following settings are for nodes on the left side of the datacenter:

```yaml
cluster_type: 6node_dx
traffic_datastore:
  num_worker_nodes: 2
datacenter: left
```

The following settings are for nodes on the right side of the datacenter:

```yaml
cluster_type: 6node_dx
traffic_datastore:
  num_worker_nodes: 2
datacenter: right
```
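The setup steps call for node_type on every coordinator and worker node and for datacenter on every node in a split-datacenter deployment, so a coordinator node on the left side would combine both. The following sketch assumes that datacenter is a top-level key rather than part of the traffic_datastore section; verify this against your PCE version before use.

```yaml
# runtime_env.yml on a coordinator node on the left side (fragment; other settings omitted).
cluster_type: 6node_dx
node_type: citus_coordinator
traffic_datastore:
  num_worker_nodes: 2
# Assumed top-level key; nodes on the right side would use a different value, such as right.
datacenter: left
```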